Lesson 13: Texture Filtering

In the previous lesson we implemented texture mapping to breathe some realism into our triangles. One thing we glossed over though is how the texture sampling and filtering process actually works.

Understanding these processes though can make a huge difference to the quality of our final rendered textures, so in this lesson we'll take a detailed look at how texture filtering works, and implement techniques known as mipmapping and anisotropic filtering to make our textures look great on screen.

Sampling

Let's start by looking at what actually happens when we sample a texture.

As we saw in our lesson on how the GPU works, when we render triangles to our window they will go through a process called rasterisation, which outputs the set of pixels which the triangle occupies on our window. A fragment shader program is then run for each of these pixels to calculate what the new colour of the pixel should be.

Let's think about what happens when a fragment shader is run for one of these pixels when we render a triangle. Each of the triangle's three vertices has a UV coordinate associated with it, along with its regular spatial coordinates. When our fragment shader looks up its UV coordinate, it will receive a weighted average of the three vertices' UV coordinates, depending on how close the pixel is to each of them.
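To make the weighted average concrete, here is a minimal CPU-side sketch in C++ of blending a UV coordinate from three vertices using barycentric weights. The `Vec2` type and `interpolateUV` function are hypothetical names for illustration, and the sketch leaves out the perspective correction that GPUs apply by default.

```cpp
#include <cassert>
#include <cmath>

// A 2D vector, used here for UV coordinates.
struct Vec2
{
    float x;
    float y;
};

// Blend three per-vertex UVs using barycentric weights w0, w1 and w2, which
// describe how close the fragment is to each vertex and must sum to 1.
// Hypothetical helper: real GPUs also apply perspective correction here.
Vec2 interpolateUV(Vec2 uv0, Vec2 uv1, Vec2 uv2, float w0, float w1, float w2)
{
    assert(std::fabs(w0 + w1 + w2 - 1.0f) < 1e-4f);
    return Vec2{
        w0 * uv0.x + w1 * uv1.x + w2 * uv2.x,
        w0 * uv0.y + w1 * uv1.y + w2 * uv2.y
    };
}
```

For a fragment at the centre of a triangle whose vertices have UVs (0, 0), (1, 0) and (0, 1), each weight is 1/3 and the interpolated UV is roughly (0.333, 0.333). A fragment sitting exactly on the second vertex (weights 0, 1, 0) simply gets that vertex's UV, (1, 0).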

In the simplest possible scenario, when our fragment shader takes this interpolated UV coordinate and a texture, and looks up the texture's colour at that position, it will fall exactly on one of the texture's pixels. In this case, which RGB value the fragment shader should use is clear.

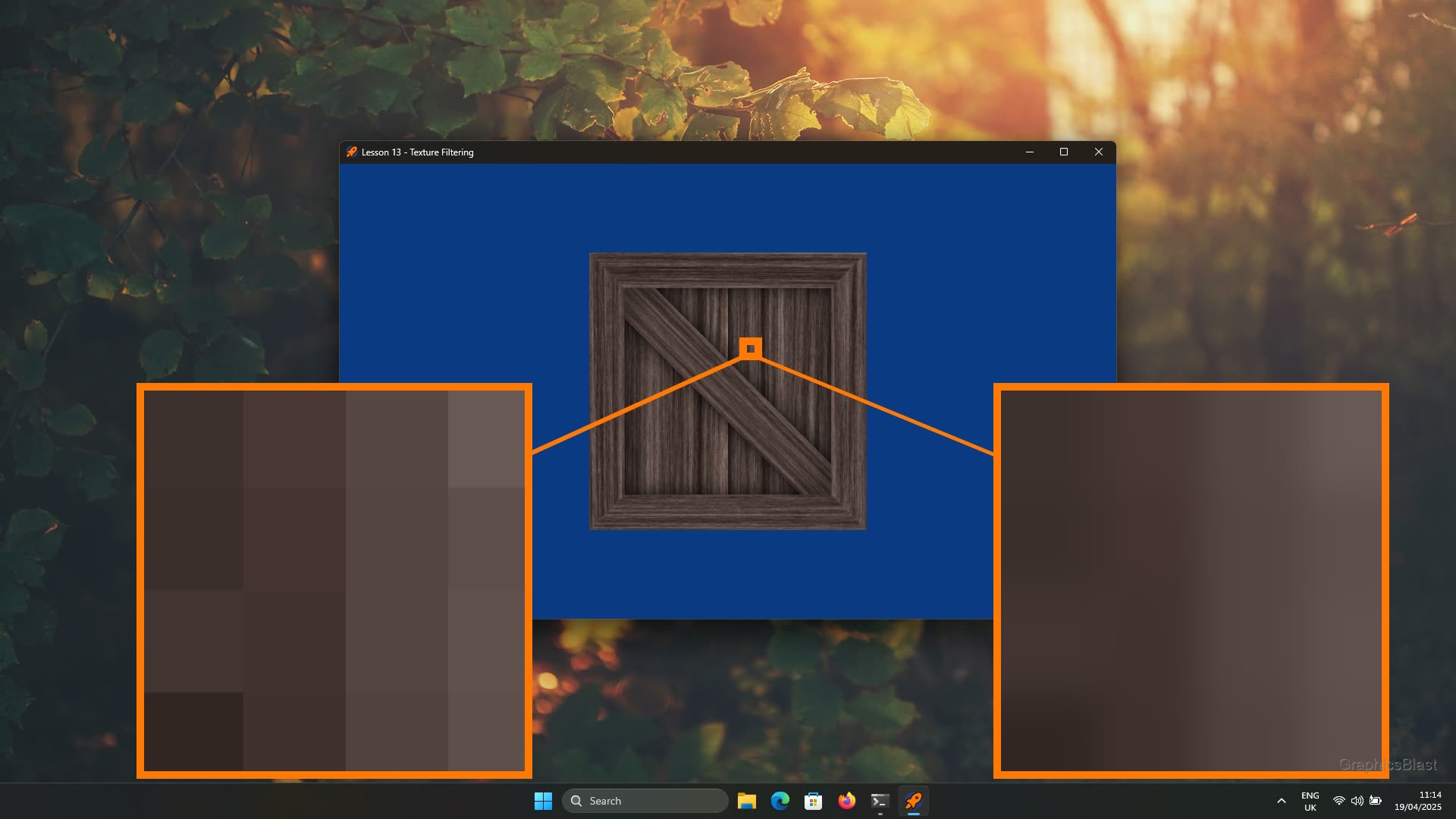

When the fragment shader's UV coordinate does not nicely correspond to an exact pixel in the texture though, but instead somewhere in between some pixels, we have a few strategies open to us to decide how to proceed.

In the previous lesson, we opted for GL_NEAREST as our sampling strategy.

This is the most basic setting, and tells our fragment shader to simply return the RGB data of whichever texture pixel (or "texel") is closest to the UV coordinate we provided.

Of course if we look up exactly between two texels, the GPU driver will be forced to choose one of them, usually rounding the UV coordinate up.

On the surface this strategy seems reasonable. But what tends to happen when we use this in the real world is that our textures can end up looking skewed, awkward and pixelated in certain circumstances.

Consider a scenario where our texture contains a distinct vertical line which is only a single texel thick. If, when we render our scene, fragment shaders happen to be executed on either side of this line, and both of them pick the line as their nearest texel, the final rendered image will contain the line at two pixels thick.

The line from the texture is now overly dominant in the final rendered image, at the expense of other features in the texture. The strategy results in the final image containing a slightly distorted version of the original texture.

Worse still, moving the camera fractionally can change the situation such that now only a single fragment shader "catches" the line as its nearest texel. The line in the rendered image suddenly becomes much less prominent. This can happen with very small movements of the camera, and causes a flickering effect in the final image known as shimmering.
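We can reproduce this effect on the CPU. The following is a simplified 1D sketch under our own assumptions: `nearestTexelIndex` and `pixelsShowingTexel` are hypothetical helpers, each screen pixel samples at its centre, and a 4-texel row is stretched across 6 screen pixels. We count how many pixels pick a particular texel as their nearest.

```cpp
#include <cassert>
#include <cmath>

// Nearest-texel lookup along one row of texels: a 1D simplification of what
// GL_NEAREST does in each axis. u is a normalised texture coordinate.
int nearestTexelIndex(float u, int width)
{
    assert(width > 0);
    int index = static_cast<int>(std::floor(u * width));
    // Keep the index inside the texture.
    if (index < 0) index = 0;
    if (index > width - 1) index = width - 1;
    return index;
}

// Stretch a row of 'textureWidth' texels across 'screenPixels' pixels, shifted
// by 'offset' pixels (a stand-in for a tiny camera movement), and count how
// many screen pixels end up showing the texel at index 'lineTexel'.
int pixelsShowingTexel(int lineTexel, int textureWidth, int screenPixels, float offset)
{
    assert(screenPixels > 0);
    int count = 0;
    for (int p = 0; p < screenPixels; ++p)
    {
        // Each pixel samples the texture at its centre.
        float u = (p + 0.5f + offset) / screenPixels;
        if (nearestTexelIndex(u, textureWidth) == lineTexel)
        {
            ++count;
        }
    }
    return count;
}
```

Here `pixelsShowingTexel(1, 4, 6, 0.0f)` returns 2, but shifting everything by half a pixel with `pixelsShowingTexel(1, 4, 6, 0.5f)` returns 1: the same texel flips between two pixels and one pixel wide, which is exactly the shimmering described above. Note also that `nearestTexelIndex(0.5f, 4)` returns 2, so a UV exactly halfway between texels 1 and 2 ends up on the higher one.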

One way to improve this is to switch to a linearly interpolating sampler.

In OpenGL this can be done by simply changing our GL_NEAREST parameter to GL_LINEAR.

A linearly interpolating sampler means that if we try to sample a texture between its texels, the sampler instead interpolates between the nearest texels, producing a weighted average depending on how close the UV coordinate is to each of them.

The result of this is a much smoother looking final rendered image. In the above scenario, when we sample around a single texel thick line, each pixel will instead be somewhat influenced by the line's RGB values based on how close it is. The final rendered image will be slightly blurred to fit the misalignment of UV coordinates and texels, which is a much better way to handle these situations than jarring pixelisation of the texture, and our eyes tend not to notice it as much.
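As a sketch of what GL_LINEAR does, here is linear interpolation along a single row of texels, written in C++ for the CPU. `sampleLinear` is a hypothetical helper; it assumes texel centres sit at (i + 0.5) / width and clamps at the edges, while a real GPU does this in both axes of a 2D texture (bilinear filtering) in dedicated hardware.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Linear interpolation along one row of texels. GL_LINEAR applies the same
// idea in both axes of a 2D texture (bilinear filtering).
// Texel i has its centre at u = (i + 0.5) / width.
float sampleLinear(const std::vector<float>& texels, float u)
{
    const int width = static_cast<int>(texels.size());
    assert(width > 0);

    // Position in texel units, measured from the centre of texel 0.
    float x = u * width - 0.5f;
    int i0 = static_cast<int>(std::floor(x));
    float t = x - static_cast<float>(i0); // 0 at texel i0, 1 at texel i0 + 1

    // Clamp both neighbours into the texture (clamp-to-edge behaviour).
    int a = std::min(std::max(i0, 0), width - 1);
    int b = std::min(std::max(i0 + 1, 0), width - 1);

    // Weighted average of the two nearest texels.
    return (1.0f - t) * texels[a] + t * texels[b];
}
```

For the row {0, 0, 1, 1}, sampling at a texel centre (u = 0.375 or u = 0.625) returns that texel's exact value, while sampling at u = 0.5, halfway between them, returns 0.5. That in-between value is the smoothing we want for images, but it isn't always what we want.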

So in this case, why would we ever want to use GL_NEAREST? Why does that code path still exist?

Nope, it's nothing to do with performance! GPUs have been doing linear interpolation of textures for a long time, so the hardware is generally highly optimised for it.

Well, the reason is that sometimes we don't want to have the blurring associated with the linear interpolation, especially when we're not using a texture for the purpose of texture mapping.

We can sometimes use textures to instead indicate specific things to our fragment shader, for example if the texture contains "0", the fragment shader should not calculate something, but if "1" then it should.

Ok, a fairly unrealistic example, but the logic is there.

This would work fine with GL_NEAREST, but GL_LINEAR would result in a fragment shader on the boundary getting values like 0.6, and the appropriate behaviour might not be clear.

Forcing our texture sampler to either pick one texel or another is useful in this case.

It's therefore useful for us to be able to configure our sampling behaviour, but probably a more reasonable default for us to use is GL_LINEAR for a more natural looking image.

In OpenGL this can be set for the currently bound texture like this:

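```cpp
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
```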

Here we've used linear interpolation for both the minification filter (used when the texture is shrunk on screen, so each texel is smaller than a fragment) and the magnification filter (used when the texture is enlarged, so each texel is larger than a fragment).

As we encapsulated these functions in our class, we can also set these parameters for our texture objects externally like this:

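```cpp
// 'texture' is one of our Texture objects
texture.setParameter(GL_TEXTURE_MIN_FILTER, GL_LINEAR);
texture.setParameter(GL_TEXTURE_MAG_FILTER, GL_LINEAR);
```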

This does exactly the same thing, but makes sure the texture is bound first and then updates the parameters.

Edge Sampling Parameters

Related to how we sample between texels is how we sample at the edge of a texture.

If we have a UV coordinate of exactly 1.0 in one of our axes, that tells us we need to look up the colour data at the very outside edge of a texture. However, we have to remember that the texels themselves occupy some physical area. Texels are defined at their centre, so hypothetically if a texel was positioned at the UV coordinate 1.0, some of its area would be past the edge of the texture. This is obviously not possible: the outer edge of a texture must be at the outside of a texel, not at its centre.

Therefore, edge texels must have a UV coordinate of less than one. The texel (or rather, its centre) must be somewhere inside the texture. This also applies at the start too - the first texel of an image will not have a UV coordinate of zero, but something greater than zero.
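Here's a small C++ sketch of where the texel centres fall along one axis; `texelCentres` is a hypothetical helper for illustration.

```cpp
#include <cassert>
#include <vector>

// Normalised UV coordinate of the centre of each texel along one axis.
// Texel i spans [i / width, (i + 1) / width], so its centre is (i + 0.5) / width.
std::vector<float> texelCentres(int width)
{
    assert(width > 0);
    std::vector<float> centres;
    for (int i = 0; i < width; ++i)
    {
        centres.push_back((i + 0.5f) / width);
    }
    return centres;
}
```

For a texture 4 texels wide this gives 0.125, 0.375, 0.625 and 0.875, so neither the first nor the last texel sits at 0.0 or 1.0.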

While not a problem if we're using GL_NEAREST as our sampling technique, if we're using GL_LINEAR and sample right at the edge, we need to think about how OpenGL will handle this.

What would it be linearly interpolating between?

There are 3 possible settings that OpenGL allows for sampling at the edges.

The first is GL_CLAMP_TO_EDGE.

This effectively locks the UV coordinates to within the texture, and samples from the nearest valid position if they lie outside.

The second and third possible options are GL_REPEAT and GL_MIRRORED_REPEAT.

These parameters mean that if we sample around the edge of our texture, OpenGL should just imagine the texture is repeated or tiled.

If the texture is tiled, then the interpolation can just be performed between the tiled texels.

If we use this approach, then we can actually use UV coordinates in our triangles outside of the usual range of (0,0) to (1,1). For example with our square we're rendering, we can assign it UV coordinates ranging from (0, 0) to (2, 2) and tell it to sample in this way, and the texture will be seamlessly tiled twice across our triangles without any changes to our fragment shaders.

If we've told OpenGL to sample our texture using GL_REPEAT, then an attempt to sample a UV coordinate at (1.1, 1.1) will be exactly the same as sampling it at (0.1, 0.1).

If we sampled (-0.1, -0.1), this would be the same as sampling (0.9, 0.9).

In effect, we're taking the texture coordinate modulo 1, keeping only its fractional part.

With UV coordinates running from (0, 0) to (2, 2), as in our square example, this results in the texture being tiled twice in each axis. This is useful for rendering things like floors or walls, or anywhere we want repetitive, tiled texturing.

For GL_MIRRORED_REPEAT, the effect is the same just that every other tiled texture has effectively been flipped.
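To make these modes concrete, here is a CPU-side sketch of how each could remap a single UV component before the texel lookup. The function names are hypothetical, and the sketch works on normalised coordinates rather than following the exact texel-space rules in the OpenGL specification.

```cpp
#include <cassert>
#include <cmath>

// GL_REPEAT style: keep only the fractional part, so 1.1 -> 0.1 and -0.1 -> 0.9.
float wrapRepeat(float u)
{
    return u - std::floor(u);
}

// GL_MIRRORED_REPEAT style: the pattern repeats every 2 units, and every
// other copy is flipped, so 1.1 -> 0.9 and 2.1 -> 0.1.
float wrapMirroredRepeat(float u)
{
    float f = u - 2.0f * std::floor(u / 2.0f); // f is in [0, 2)
    return (f <= 1.0f) ? f : 2.0f - f;
}

// GL_CLAMP_TO_EDGE style: clamp to the centres of the first and last texels,
// so linear filtering never blends in anything from beyond the edge.
float wrapClampToEdge(float u, int width)
{
    assert(width > 0);
    float lo = 0.5f / width;
    float hi = 1.0f - 0.5f / width;
    return std::fmin(std::fmax(u, lo), hi);
}
```

With these, `wrapRepeat(1.1f)` gives roughly 0.1 and `wrapRepeat(-0.1f)` roughly 0.9, matching the examples above, while `wrapMirroredRepeat(1.1f)` gives roughly 0.9 because the second copy is flipped. For a 4-texel-wide texture, `wrapClampToEdge(1.2f, 4)` stops at 0.875, the centre of the last texel.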

These sampling parameters in OpenGL are set like this:

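```cpp
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_REPEAT); // or GL_MIRRORED_REPEAT / GL_CLAMP_TO_EDGE
glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_REPEAT);
```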

Again this will apply to the currently bound texture only, and we can call it on one of our texture objects like this:

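```cpp
// 'texture' is one of our Texture objects
texture.setParameter(GL_TEXTURE_WRAP_S, GL_REPEAT);
texture.setParameter(GL_TEXTURE_WRAP_T, GL_REPEAT);
```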

Here, S and T are just used instead of the more common U and V for texture coordinates.

Note that a tiling behaviour with GL_REPEAT is actually the default behaviour of OpenGL, so you don't actually need to make these calls if that's your preferred strategy.

Mipmapping

While the above settings definitely give us better control of our textures and make them look better, if we view our image with much more pronounced magnification or minification, it's still possible to see some strange artefacts.

If we again work with our example of a texture with a thin contrasting line on it, the line can still seem to twinkle, shimmer, and even disappear completely when viewed from far away. The sharper and more contrasting the fine detail in the original texture, and the further away it's viewed from, the more this happens.

What's happening is that even if we use GL_LINEAR to sample the texels around our interpolated UV coordinate, under heavy minification, some texels may not be sampled at all.

While linear interpolation takes into account several texels around where we want to sample the texture, there may be a large gap to the next pixel/fragment shader's UV coordinate.

In this gap, some important texture details may lie, and not get sampled or taken into account at all.

If we then move the camera very slightly, this fine detail may now make it into the rendered scene, but perhaps some other detail becomes lost. The end result is that as we move our camera, distant objects will shimmer, with details sometimes visible and sometimes not. Not great.

Well, that's where mipmapping comes in.

Mipmapping is a way to avoid this, and actually improve GPU performance at the same time!

The idea is that for every texture we upload to our GPU, say 512x512 texels, we also downsize the image by half to 256x256, and upload that version of the texture too. Then we halve it again to 128x128 and upload that too, and so forth all the way down to a 1x1 sized texture. There is of course a slight memory overhead to this (each level has a quarter of the texels of the one before, so together the extra levels add up to just under 1⁄3 extra memory), but memory is cheap and plentiful these days, and visual quality and performance are definitely more important.
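The arithmetic behind the chain of levels and the roughly 1⁄3 overhead can be checked with a short C++ sketch. `mipChain` and `mipMemoryOverhead` are hypothetical helpers; they assume each dimension is halved and rounded down, never going below 1, which is also how OpenGL sizes its mipmap levels, so the same code works for non-power-of-two sizes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Sizes of every mipmap level for a width x height texture, halving each
// dimension (rounding down, never below 1) until we reach 1x1.
std::vector<std::pair<int, int>> mipChain(int width, int height)
{
    assert(width > 0 && height > 0);
    std::vector<std::pair<int, int>> levels;
    levels.push_back({width, height});
    while (width > 1 || height > 1)
    {
        width = width > 1 ? width / 2 : 1;
        height = height > 1 ? height / 2 : 1;
        levels.push_back({width, height});
    }
    return levels;
}

// Texels in all levels except level 0, as a fraction of the texels in level 0.
double mipMemoryOverhead(int width, int height)
{
    std::vector<std::pair<int, int>> levels = mipChain(width, height);
    std::int64_t base = static_cast<std::int64_t>(width) * height;
    std::int64_t extra = 0;
    for (std::size_t i = 1; i < levels.size(); ++i)
    {
        extra += static_cast<std::int64_t>(levels[i].first) * levels[i].second;
    }
    return static_cast<double>(extra) / static_cast<double>(base);
}
```

A 512x512 texture gets 10 levels (512 down to 1) and its extra levels add about 0.333 of the original memory. A 123x321 texture gets 9 levels, ending at 1x1.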

Let's imagine an extremely distant triangle being rendered with a large texture. If the entire triangle only occupies a few pixels on screen, then sampling repeatedly from a 4096x4096 resolution texture is slightly overkill. If mipmapping is enabled, OpenGL will recognise this mismatch in size, and sample from a much smaller downscaled version of the texture instead.

What happens is that because we're sampling from a smaller texture, there's less memory bandwidth pressure than when using the original full sized image. The caching performance of the GPU is improved too. That's where the performance gains of mipmapping come from.

But secondly, and more importantly, a single texel of the downsized image is an average of the high-resolution texture's texels for that area. Rather than sampling an essentially arbitrary texel from the original texture, we're instead sampling a texel which is in effect an average of all the texels in this area of the texture. So rather than potentially landing on or excluding small details when we sample, which changes with small camera movements, we'll instead consistently get an average of the area.
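Here is a sketch of building one mipmap level from the previous one by averaging each 2x2 block of texels, a simple box filter. `downsample2x2` is a hypothetical helper that assumes a single-channel image with even dimensions; the exact filter glGenerateMipmap uses is left to the OpenGL implementation.

```cpp
#include <cassert>
#include <vector>

// Build the next mipmap level of a single-channel image by averaging each
// 2x2 block of texels. Assumes even width and height to keep things short.
std::vector<float> downsample2x2(const std::vector<float>& src, int width, int height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    assert(static_cast<int>(src.size()) == width * height);

    const int outW = width / 2;
    const int outH = height / 2;
    std::vector<float> dst(outW * outH);

    for (int y = 0; y < outH; ++y)
    {
        for (int x = 0; x < outW; ++x)
        {
            // The four source texels covered by destination texel (x, y).
            float sum = src[(2 * y) * width + (2 * x)]
                      + src[(2 * y) * width + (2 * x + 1)]
                      + src[(2 * y + 1) * width + (2 * x)]
                      + src[(2 * y + 1) * width + (2 * x + 1)];
            dst[y * outW + x] = sum / 4.0f;
        }
    }
    return dst;
}
```

Downsampling a 4x2 image containing a one-texel-wide vertical line produces a texel of 0.5 where the line was. The line is no longer something we either hit or miss when sampling, but a consistent contribution to the average.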

Using these downscaled images when there is a mismatch between texture size and screen size gives us a much better approximation of the original texture in our rendered triangle, giving us better visual quality and fewer rendering artefacts like shimmering.

Mipmapping considerations

OpenGL will actually do most of the work for us to get mipmapping working. It provides us with functions to automatically generate the different sizes or 'levels' of mipmaps (the individual downsized images) when we upload a texture to the GPU.

As the process potentially involves memory allocations and buffer resizing, this really should be done at program initialisation though, rather than at runtime. This means we need to know before we load (or reload) the texture if we will use mipmapping for it.

Another thing we need to consider is that while we said above that OpenGL can automatically select which mipmap level to use depending on what size the final rendered image is, there is a bit more to take into account. Once again, this is actually a configurable parameter and we can do some clever things here! Just like with regular sampling, OpenGL can select whichever mipmap is closest in size to the triangle on screen we're rendering. But just like texture sampling, if our triangle is mid-way between the sizes of two mipmaps, OpenGL can actually calculate the sampled value from both mipmaps, and then linearly interpolate between these!
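A rough C++ sketch of both strategies follows. Real GPUs work out the footprint per pixel from how quickly the UV coordinates change across the screen; here we simply take the number of texels covered by one pixel as an input. `mipLevelOfDetail`, `nearestMipLevel` and `blendMipLevels` are hypothetical helpers, and the exact rounding rules in the OpenGL specification may differ slightly from `std::lround`.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Level of detail from a pixel's footprint: if one screen pixel covers
// 'texelsPerPixel' texels, log2 of that is how many times the texture should
// be halved. Clamped to the levels that actually exist.
float mipLevelOfDetail(float texelsPerPixel, int levelCount)
{
    assert(texelsPerPixel > 0.0f && levelCount > 0);
    float lod = std::log2(texelsPerPixel);
    float maxLevel = static_cast<float>(levelCount - 1);
    return std::min(std::max(lod, 0.0f), maxLevel);
}

// _MIPMAP_NEAREST style: use only the closest level.
int nearestMipLevel(float lod)
{
    return static_cast<int>(std::lround(lod));
}

// _MIPMAP_LINEAR style: take one sample from each of the two levels around
// 'lod' and blend them by the fractional part. sampleAtLevel[i] holds the
// (already filtered) sample from level i for the same UV coordinate.
float blendMipLevels(const std::vector<float>& sampleAtLevel, float lod)
{
    assert(!sampleAtLevel.empty() && lod >= 0.0f);
    const int last = static_cast<int>(sampleAtLevel.size()) - 1;
    int lower = std::min(static_cast<int>(std::floor(lod)), last);
    int upper = std::min(lower + 1, last);
    float t = std::min(lod - static_cast<float>(lower), 1.0f);
    return (1.0f - t) * sampleAtLevel[lower] + t * sampleAtLevel[upper];
}
```

If one pixel covers 4 texels, the level of detail is log2(4) = 2, so level 2 (a quarter of the full resolution in each axis) is the best match. A level of detail of 1.5 sits halfway between levels 1 and 2, and blending gives an equal mix of the two.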

NOTE: Historically, there were restrictions on using mipmapping on textures whose sizes are not powers of two. It's clear how we can repeatedly downsize a 512x512 pixel texture, but not necessarily a 123x321 one. Long ago this was a big issue, but mainstream GPUs have handled this situation for many years now - it's not something to worry about!

Anisotropic Filtering

Closely related to mipmapping is a technique called anisotropic filtering. While mipmapping generally improves the appearance of our textures, there is one edge case that can still make our textures look less crisp than expected.

Consider viewing a texture mapped triangle which is at a highly oblique angle relative to the camera - part of it is very close to the camera, while part of it is very far away. With mipmapping, the GPU selects an appropriate mipmap for each pixel, so the change in distance across the triangle is handled. The trouble is that at such an angle, each screen pixel covers a long, thin strip of the texture: stretched along the direction receding from the camera, but narrow across it. A high resolution mipmap suits the narrow direction but undersamples along the strip, bringing back the shimmering we saw earlier. A low resolution mipmap suits the long direction but blurs away detail across the strip. Standard mipmapping picks the level based on the longer direction, so oblique surfaces end up looking blurrier than they should.

This is the edge-case anisotropic filtering aims to solve.

The idea is that instead of sampling the texture exactly where the UV coordinate is, the GPU will also take a few extra samples around it.

How anisotropic filtering is actually implemented is GPU specific, but essentially the GPU will try to estimate the size and shape of the window's pixel when projected onto the texture. Then, instead of using only the fragment's interpolated texture coordinate (which will be at the centre) to sample the texture, it will take multiple samples. These samples can be positioned in a smart way, taking into account the camera's position relative to the triangle, and the various mipmapping levels. The aim is to take enough samples to fill the area, which gives us a much better approximation of the true colour of the texture across the area the screen pixel occupies.

Usually, the number of samples is given as a multiple, for example 2x, 4x, 8x, or 16x the number of samples. The exact values available are hardware specific, but they most commonly double each time up to a maximum of 16x.

16x the number of samples is usually a hardware imposed limitation, and to be honest at the point we're doing 16 times the number of samples per pixel, the problem is pretty much resolved anyway.

Note that what we're actually providing is not the number of samples the GPU must take, but the maximum number of samples the GPU can use. So if the GPU determines that our on-screen pixel exactly corresponds to a single texel, it can just perform a single lookup, and the value will be exactly the same as if anisotropic filtering had not been used. In effect, the technique is selectively applied only where needed.
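One way a GPU might make this estimate is the approximation that the EXT_texture_filter_anisotropic extension specification describes as a possible implementation, sketched below in C++. Hardware is free to do something different. `estimateAnisotropy` and `AnisotropyEstimate` are hypothetical names, and the inputs are the lengths, in texels, of one screen pixel's footprint along the screen's x and y directions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct AnisotropyEstimate
{
    int sampleCount;     // samples to take along the footprint's long axis
    float levelOfDetail; // mip level to take those samples from
};

// footprintX / footprintY: how many texels one screen pixel spans along the
// screen's x and y directions. maxAnisotropy is the configured upper limit.
AnisotropyEstimate estimateAnisotropy(float footprintX, float footprintY, int maxAnisotropy)
{
    assert(footprintX > 0.0f && footprintY > 0.0f && maxAnisotropy >= 1);
    float pMax = std::max(footprintX, footprintY);
    float pMin = std::min(footprintX, footprintY);

    // A long, thin footprint asks for more samples, up to the configured limit.
    int n = std::min(static_cast<int>(std::ceil(pMax / pMin)), maxAnisotropy);

    // Each sample only has to cover pMax / n texels, so a sharper mip level can
    // be used than plain mipmapping would pick (log2(pMax)).
    float lod = std::max(0.0f, std::log2(pMax / static_cast<float>(n)));
    return AnisotropyEstimate{n, lod};
}
```

A pixel whose footprint is 8 texels long and 1 texel wide gets 8 samples from level 0 (full resolution), where plain mipmapping would have picked level log2(8) = 3 and blurred the detail across the strip. Limit the maximum to 4 and we get 4 samples from level 1 instead. A square 1x1 footprint gets a single sample, which is the "only where needed" behaviour described above.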

The technique is especially powerful when paired with mipmapping as the GPU can not only use multiple samples, but also in effect multiple sized samples from the texture. This allows the pixel's area to be more efficiently filled from the texture, and for a pixel to get the most data for the area of the texture it should cover. So anisotropic filtering is almost always paired with mipmapping to get the best possible texture quality.

Like mipmapping, anisotropic filtering has been a mainstay of GPU rendering for decades. As a result, the performance cost of using it is minimal, while the improvement in quality can be dramatic. Again, the improvement is only visible where the geometry being rendered is at a very acute angle relative to the camera, while also being quite large. But when this does happen, it's well worth the time to implement.

The great thing is that because this trick is performed by the GPU driver, we don't actually need to do much besides enabling it. For each texture we want to use it with, we simply need to set how many extra texture samples we want to use, and our textures will (in certain situations) just look better.

Updating our texture class

Let's now see how we can implement these ideas into our texture class from the previous lesson.

We'll add three new variables to our texture header file (texture.h).

These will control whether we should use linear interpolation between texels when sampling (falling back to taking the nearest texel to the UV coordinate if not), whether we should generate mipmaps when loading the texture, and how many extra anisotropic samples we can use.

```cpp
class Texture
{
public:
    Texture();

    void setFilename(string newTextureFilename);
    bool loadTexture();
    void deleteTexture();

    // … setters for the three new variables

    void setParameter(GLenum parameter, int value);

    void bind();
    void unbind();

    GLuint getHandle();
    string getFilename();
    string getError();

private:
    string filename;

    // … the three new variables

    GLuint textureHandle;
    string errorMessage;
};
```

We add the variables as well as their setter functions to the class.

Again just as a reminder, we'll write these functions under the assumption that they are called before (re-)loading the texture. Changing the sampling method or the number of anisotropic samples is not so bad, but generating mipmaps only needs to be done once and is relatively expensive, so it's best done when the texture is created.

In our texture code (texture.cpp), we'll then update our constructor to add some default values to these variables:

```cpp
Texture::Texture()
{
    textureHandle = 0;

    // … default values for the three new variables
}
```

By default we'll interpolate between texels, enable mipmapping, and use up to 16x the number of samples for anisotropic filtering.

These settings are fairly reasonable defaults for textures, although as we mentioned we definitely will need to be able to turn mipmapping and interpolation on and off in certain cases.

We can then implement our setter functions to allow us to change these variables:

```cpp
void Texture::deleteTexture()
{
    glDeleteTextures(1, &textureHandle);
    textureHandle = 0;
    errorMessage = "";
}

// … setter functions for the three new variables

void Texture::setParameter(GLenum parameter, GLint value)
{
    bind();
    glTexParameteri(GL_TEXTURE_2D, parameter, value);
    unbind();
}
```

Our setters are straight-forward. Here we ensure that our anisotropic filtering is set to at least a value of 1. Using a value of 1 as the maximum number of samples is in effect not doing any anisotropic filtering, but numbers below this really don't make any sense.
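As a rough illustration of these variables, their defaults and the setters, here is a GL-free C++ sketch. `TextureSettings`, its members and its functions are hypothetical names; in the lesson these live directly in the Texture class alongside its OpenGL handle.

```cpp
#include <cassert>

// GL-free sketch of the three new texture settings. All names are
// hypothetical; the lesson keeps equivalent variables in the Texture class.
class TextureSettings
{
public:
    // Defaults from the lesson: interpolate, mipmap, and allow up to 16x anisotropy.
    TextureSettings() : linearFiltering(true), mipmaps(true), anisotropyFilters(16) {}

    void setLinearFiltering(bool enabled) { linearFiltering = enabled; }
    void setMipmaps(bool enabled) { mipmaps = enabled; }

    // A maximum below 1 sample makes no sense (OpenGL rejects anisotropy values
    // below 1.0), so clamp the request to at least 1.
    void setAnisotropyFilters(int samples) { anisotropyFilters = samples < 1 ? 1 : samples; }

    bool getLinearFiltering() const { return linearFiltering; }
    bool getMipmaps() const { return mipmaps; }
    int getAnisotropyFilters() const { return anisotropyFilters; }

private:
    bool linearFiltering;
    bool mipmaps;
    int anisotropyFilters;
};
```

Requesting 0 anisotropic samples gets clamped to 1, which in effect switches anisotropic filtering off.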

Now we can update our loadTexture function to make use of these new variables:

```cpp
    glGenTextures(1, &textureHandle);
    glBindTexture(GL_TEXTURE_2D, textureHandle);

    glTexImage2D(GL_TEXTURE_2D, 0, GL_RGBA8, surfaceRGBA->w, surfaceRGBA->h, 0, GL_RGBA, GL_UNSIGNED_BYTE, surfaceRGBA->pixels);

    // … mipmap generation and filter settings
```

Previously after generating a texture on the GPU and copying the image data into it, we just set our minification and magnification filters to both use GL_NEAREST sampling.

This time, if mipmaps are enabled, we make a call to glGenerateMipmap(GL_TEXTURE_2D).

This will generate all the ever-smaller mipmaps necessary for the currently bound (2D) texture.

It will automatically handle everything for us, including all the memory allocations.

One call and we're done!

With the mipmaps generated, we can set our sampling parameters depending on whether we want to interpolate between the texels, or just use the nearest texel to our UV coordinate as we did in the previous lesson.

For the magnification filter, things are simple.

If we want to use linear interpolation, we use GL_LINEAR, and if not we use GL_NEAREST.

For the minification filter on the other hand, as we're using mipmaps, we also need to explain how we select which mipmap to use. It's highly unlikely that the size of the triangle we're rendering on screen exactly matches the resolution of a mipmap level, so we have two approaches. The first option is to simply choose the mipmap with the resolution closest to the size of the triangle and sample from that. The second option is to take the two nearest mipmap levels, sample from both, and blend the two results, weighted by how close the on-screen size is to each level. So we have one more parameter we can control here.

This is controlled using the same sampling parameter we've used before.

So in the code above, when we're sampling a texture with mipmapping, and using linear interpolation, we use GL_LINEAR_MIPMAP_LINEAR.

This tells OpenGL that not only do we use GL_LINEAR to interpolate between texels, but for the mipmap levels we should calculate the value for both of the two closest mipmaps, and then interpolate the result (hence the _MIPMAP_LINEAR).

If we don't want to use linear interpolation, we use the flag GL_NEAREST_MIPMAP_NEAREST to indicate that we want to just use the nearest texel to our UV coordinate (GL_NEAREST), and to simply use the nearest mipmap level to the current size of the triangle on screen (_MIPMAP_NEAREST).

The other combinations of flags (i.e. GL_LINEAR_MIPMAP_NEAREST and GL_NEAREST_MIPMAP_LINEAR) are also available should you wish to use them!

Next, let's look at how we sample the texture if we don't want to use mipmapping:

```cpp
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
        }
    }
    // … filter settings when mipmapping is disabled
```

Much simpler!

If we're using linear interpolation for this texture, we use the sampling flag GL_LINEAR for both magnification and minification, else we fall back to using the nearest texel for each with the GL_NEAREST flag.
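The choice of filters across both branches boils down to a small table. Here's a C++ sketch of it that returns the OpenGL constant names as strings, so it runs without an OpenGL context; `chooseFilters` and `FilterChoice` are hypothetical names, and in real code the values passed to glTexParameteri would be the GLenum constants themselves.

```cpp
#include <cassert>
#include <string>

// The filter names our loadTexture logic would pass for each combination
// of settings. Strings stand in for the GLenum constants.
struct FilterChoice
{
    std::string minFilter; // value for GL_TEXTURE_MIN_FILTER
    std::string magFilter; // value for GL_TEXTURE_MAG_FILTER
};

FilterChoice chooseFilters(bool mipmaps, bool linear)
{
    // Magnification never involves mipmaps, only nearest or linear.
    const std::string mag = linear ? "GL_LINEAR" : "GL_NEAREST";

    if (mipmaps)
    {
        // The _MIPMAP_ suffix picks between blending the two nearest levels
        // (LINEAR) and using only the closest level (NEAREST).
        const std::string minFilter = linear ? "GL_LINEAR_MIPMAP_LINEAR" : "GL_NEAREST_MIPMAP_NEAREST";
        return {minFilter, mag};
    }
    return {linear ? "GL_LINEAR" : "GL_NEAREST", mag};
}
```

Note that the magnification filter never involves mipmaps: when a texel covers more than one pixel, the full resolution level is always the right one, so only GL_NEAREST and GL_LINEAR are valid there.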

Next, we can look at configuring anisotropic filtering:

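```cpp
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
        }
    }

    // Ask OpenGL for the largest anisotropy value this implementation supports
    GLint maxAnisotropyFilters = 0;
    glGetIntegerv(GL_MAX_TEXTURE_MAX_ANISOTROPY, &maxAnisotropyFilters);

    // Don't ask for more samples than the hardware allows
    if(anisotropyFilters > maxAnisotropyFilters)
    {
        anisotropyFilters = maxAnisotropyFilters;
    }
```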

We have to consider that the hardware the program is running on may not support the number of anisotropic samples we want. If this happens, the GPU will try its best and use the maximum number it supports. To be safe, we'll first query the maximum number supported on the current machine.

We create a GLint and ask OpenGL to copy the maximum number of anisotropic samples allowed using the glGetIntegerv function.

The OpenGL symbol might seem a little tautological (GL_MAX_TEXTURE_MAX_ANISOTROPY), but the logic is that we're setting the maximum number of anisotropic filtering samples, and to get the upper bound for this we ask OpenGL for the maximum number of samples we can set the texture's maximum anisotropic filtering samples to!

When we have this we make sure that our anisotropyFilters variable doesn't exceed it.

Note that GL_MAX_TEXTURE_MAX_ANISOTROPY is a single, implementation-wide limit, so the value returned doesn't depend on which texture is bound. The texture does still need to be bound for the glTexParameteri call that applies the setting.

With that in place, we can finish off our updated loadTexture function by now setting the maximum number of anisotropic samples to use:

```cpp
    if(anisotropyFilters > maxAnisotropyFilters)
    {
        anisotropyFilters = maxAnisotropyFilters;
    }

    // Allow up to anisotropyFilters samples when this texture is sampled
    glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAX_ANISOTROPY, anisotropyFilters);

    SDL_DestroySurface(surfaceRGBA);
    SDL_DestroySurface(surface);

    unbind();

    return true;
}
```

This is done just like the rest of the sampling parameters, instead setting the GL_TEXTURE_MAX_ANISOTROPY parameter.

With that, we're done! By default we now have mipmapped textures using anisotropic filtering and linear interpolation of the texels! We also have a way to control these settings before the texture is loaded, which we'll definitely need for the future!

Note that we don't actually need to explicitly set the edge sampling/wrapping parameters in this function. We could, but I think the best default setting for this is to assume the textures are repeating, which is actually OpenGL's default setting anyway. So this is already done for us! If you want to test this, you can modify the UV coordinates for our triangles to go from 0 to 3, and you'll see the texture tiled three times in each direction.

Summary

That's all for our lesson on texture sampling. Our graphics should now be looking much sharper!

Again while the anisotropic filtering parameter can be changed at any time, for best results it should really be paired with mipmapping. Changing the mipmapping state meanwhile won't actually make any changes until you (re-)load the texture.

In the next lesson we're going to look at model loading, and start fleshing out our virtual world. See you there!